Generative AI: The war on expression of the self

By Collin Shreffler

Have you ever made something? Used a brain that took millions of years of evolution and over a decade of schooling to develop? Good news: you’re ahead of the curve for the foreseeable future. Don’t start celebrating for being above average just yet, however. Your newfound remarkability has come at a great cost, one that few are fully aware of and that our ancestors could never have conceived of. You’re on this site, so you must know where this is going. Yet again, it’s our old “friend”, generative AI.

The arts have been a cornerstone of every human culture for thousands of years. From the Louvre in Paris, to the China Academy of Art in Hangzhou, to uncontacted Amazonian tribes deep in the rainforests of Brazil, humans are born with an innate, still unexplained desire for art and self-expression. If you’re the post-modernist type, nearly anything can count as art, so long as it is a creative outlet for self-expression. This article is art, this website is art, jazz is art, and so is product packaging. From Michelangelo’s David to a box of Froot Loops, art surrounds us, and it has always reminded us of our humanity in the darkest of times.

This is changing. Software is slowly robbing us of our last bastion of creativity. Skilled craftsmen once made everything anyone used with nothing but their bare hands and handcrafted tools. Eventually, that work came to be shared equally between man and machine, a development few cared to protest. And why would they? The products were of the same quality, only cheaper and more abundant. The only ones who protested were the craftsmen and artisans being undermined by machines, and they were dismissed as old-fashioned and standing in the way of progress. Fast forward nearly two centuries, and automation has arrived at the last stand of human creativity, a medium it had previously left untouched.
The modern artists AI targets (the writer, the painter, and the musician) are fighting for their very existence and for the preservation of mankind’s last craft, one that until now faced no threat of extinction, yet most people have little idea of its importance. Unbeknownst to the majority, their struggle is the culmination of a two-century invisible war, one in which man has never won a single battle. Automation may have many benefits for our modern way of living, but is there not a point where it goes too far? If the struggle against AI replacing our musicians, artists, and writers is lost, there will be nowhere left to fight. Generative AI images, articles, books, and sounds are an affront to the arts that man has preserved for thousands of years, and thanks to corporate interests and the desire for profit and control, those arts are more threatened now than at any other time in human history. Dictators, floods, fires, states, and kings alike could never do the damage to the arts that generative AI is doing now. The arts are burning. Do you care enough to help extinguish the blaze that seeks to burn our expressions to cinders? Will you take part in the last battle for the preservation of human creativity? The front line is here, in the AntiAI movement. Enlist today.

AI: The Data Farm Singularity

By Collin Shreffler

As AI is continually advertised to us on every social media platform, in magazines, in news articles, in television ads, in every Google search, and even in the platform I’m using to write this (Google Docs, with its built-in “Gemini” assistant), one has to wonder: why? Why has this been the biggest tech obsession since the advent of the solid-state transistor? Why have nearly a trillion dollars, pesos, euros, pounds, and yuan been thrown haphazardly at this seemingly benign technology? It is free, after all. The vast majority of AI models, whether they generate text, images, sound, or even video games, are completely free, and sometimes they are even forced on the user. Every Google search is turned into a prompt for an AI language model, regardless of the user’s wishes. Even more independent options like the Brave browser have an AI assistant built in by default, though thankfully, after user backlash, an option to turn it off was provided a few months after its introduction.

Why is this? Well, if you know how the internet works and how it’s profitable, you know the answer: user data. Google makes most of its money from advertisements, most of which are targeted at users with collected and harvested user data. However, browsers have been slowly adding ways to combat data farming. Firefox, Opera, Brave, and Safari have all advertised data protection for their users, Safari being the most significant, as most iOS users use it as their default browser. With less data being harvested by Google, the advertisements it serves are less targeted and therefore less valuable to advertisers, which affects its bottom line. Google isn’t the only company doing this, but it is the one I’d like to focus on because it is the most, shall we say, in-your-face about new AI tech, even considering purchasing a nuclear power plant to power its machine learning algorithms.
Clearly, Google has a vested interest in pushing its own AI specifically. Now, I’m sure the reader knows generally how AI machine learning algorithms work, but in case you don’t, or need a refresher, here are the basics:

Software is developed to scrape the internet for relevant information, teaching a program everything from language to historical fun facts, often indiscriminately and without the permission of the copyright holders. (Already not off to a great start.)

This data is then stored and used to algorithmically build a language model, a machine learning system that can “speak” to you the way a human could, in text form, about anything you ask (provided your request is not flagged as “inappropriate”).

This language model can then be adapted for different purposes; the one I’m focusing on here is the AI-powered personal assistant.

For AI to work, it needs data. Lots and lots of data; without it, it’s like a body without a brain. For an AI assistant to work, not only does it need an internet’s worth of data, but it also needs to know nearly everything it can about its user. Some of this is basic, like a name and location, but some is more personal and more exploitable: addresses, names of friends, hobbies, shopping habits, diet, preferred supermarket, your commute to work, your sleep schedule, what medications you take and when you take them, your health and exercise habits, even your income. These and more need to be known for the assistant to be of any use. How can your AI assistant remind you to take your medication every day if it doesn’t know you take it? How could it help you pick which shirt to buy if it doesn’t know you intend to buy a shirt in the first place? How can it remind you to go to bed and wake up if it doesn’t know when you go to bed and wake up? Without this information, most of which users hand over without fully realizing it, the assistant is useless and won’t attract a user base. And where is this information stored? Clearly not locally: your smartphone doesn’t have the 64 zettabytes of storage needed for the entire internet that powers AI, so it has to be stored on servers, where its hosts can harvest all of your sweet, sweet personal information, far more of it, and far more valuable, than any data they would get from a thousand Google searches. Multiply this by a user base of billions, and there is serious money to be made off of you and your information. I know a lot of people don’t care about their internet privacy, but it’s far more important than just being served annoying advertisements. Do you really trust a company like Google to keep this data confidential? I’m certain they aren’t the only company using this information.
Selling data to other companies is a huge business in and of itself, and there is no doubt that Google is double, triple, quadruple dipping in that regard. AI is the newest tool for harvesting more of your personal information than ever before, data that is too often poorly secured and that finds its way to bad actors who exploit it even more than the tech giants who harvested it from you. Is this truly worth the cost of admission for a tool that is unreliable, awful for the environment, and only possible through the theft of humanity’s collective efforts? All so a massive conglomerate can make even more billions of dollars each year? Not to me, and not to anyone who supports the AntiAI movement. This is just one of many reasons why, every day, more people reject AI and what little it offers.

Ongoing AI Case Studies

We recently had a very talented writer join our team. They will remain anonymous for now.

This section will feature our latest work on contemporary issues involving AI, with updates as each story unfolds. These articles are 100% human-written; feel free to check using an AI checker. We put considerable time and effort into proper citations and try to limit our biases. Unlike the AI News Wall of Shame page, this section explores in more depth why the latest AI news concerns everyday people like YOU. You may one day see these articles referenced as early warning signs that mainstream media chose to ignore.

Here's the general format:

1. A brief background regarding what happened, including fact checking and summarizing exactly what transpired.

2. The pain points on why this AI technology was adopted irresponsibly and how it could affect you.

3. The risks of ongoing improper development and its possible trajectories, within reason (no extreme fear-mongering).

4. How we can address this issue together [Next Steps].

Case Study #2: Report: European Union Artificial Intelligence Act Published, In Force August 1

July 25, 2024

The European Union has published the full and final text of the EU AI Act in its Official Journal, as reported by TechCrunch. The new law enters into force on August 1 of this year (2024), and all of its provisions will be fully applicable in two years, though some will apply much earlier.

Why the EU AI Act?

So first, why is the EU taking a stance on AI right now? According to the European Commission, there is a list of reasons.

The proposed rules will:

- Address risks specifically created by AI applications;

- Prohibit AI practices that pose unacceptable risks;

- Determine a list of high-risk applications;

- Set clear requirements for AI systems used within high-risk applications;

- Define specific obligations for deployers and providers of high-risk AI applications;

- Require a conformity assessment before a given AI system is put into service or placed on the market;

- Put enforcement in place after a given AI system is placed on the market;

- Establish a governance structure at the European and national levels.

The reasoning seems sound. As institutions around the world race to introduce curbs on the technology, the EU is the first major world organization to put legislation regulating artificial intelligence into place, and it stands to lead the pack (should there ever be one). There is plenty of controversy surrounding this new legislation, which we’ll get into later.

EU AI Act in Practice

But let’s look at what the law means in practice.

What does the new EU AI Act entail? Here are some of the major aspects of the Act, who is most likely to be affected, how, and when:

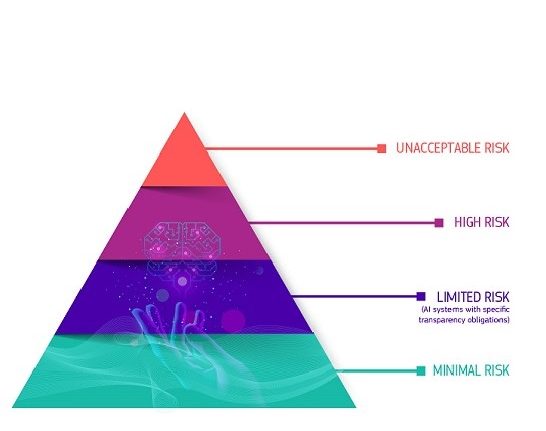

First up is AI risk classification. The European Commission defines four tiers of AI technology that this new law will affect.

The first tier is the unacceptable risk category. This category includes things like:

- Subliminal manipulation, i.e., influencing a person’s behavior without them being aware of it (think subliminal advertising to the nth degree). Another example could be AI-generated information that would influence a person to vote in a certain way.

- The exploitation of people’s vulnerabilities to harm them. This could be a toy or game that uses AI-generated patterns to push a person to hurt themselves or others.

- Biometric categorization, i.e., using biometric data to sort people by traits such as gender, political orientation, religion, sexual orientation, ethnicity, or philosophical beliefs.

- Social scoring (as is commonly done in China using AI-powered facial recognition technology) which could lead to discriminatory outcomes and the exclusion of certain groups.

- Real-time remote biometric identification in public spaces for law enforcement purposes, except in the narrow cases the Act explicitly allows.

- Assessing a person’s emotional state in the workplace or educational settings.

- Predictive policing, meaning AI cannot be used to judge in advance a person’s likelihood of committing a crime.

- Untargeted scraping of facial images from the internet or from video surveillance footage to create or expand facial recognition databases.

Those are the big eight NO-NOs that the EU has said will be unacceptable. These practices will be completely prohibited by the EU in all of its member states.

Next, we have high-risk AI systems. Because they are not explicitly prohibited, these will most likely be the most heavily regulated. This level includes safety components of already-regulated products, as well as systems in specific areas that could negatively affect people’s safety and/or health.

This has been one of the most contentious areas of discussion in the lead-up to the new law’s approval and rollout. It places a large burden on organizations already operating in the AI sphere, so, of course, many public and private interests are involved.

Some of the affected areas include biometrics and biometric-based systems, critical infrastructure management, education and vocational training, employment and worker management tools, access to private and public sector benefits, law enforcement, migration, asylum and border control processes, and the administration of justice. Of course, the lines of what constitutes “high risk” are blurry, which is where the controversial aspects of this part of the legislation come from.

The final two levels are limited risk and minimal risk. Limited risk covers AI systems that may allow for manipulation or deceit; these systems must remain transparent, with humans informed that they are interacting with an AI. A chatbot would be a good example of this category. Minimal risk covers all other AI systems not included in the previous three categories. They have no restrictions or mandatory obligations but are expected to exercise good judgment in case they are elevated to a more stringent category.

This graphic should help to show how the regulation will work a little better:

Image credit: European Commission

So who cares which tier you fall into, right? Well… Article 99 of the law stipulates that “Non-compliance with the prohibition of the AI practices referred to in Article 5 (prohibited AI practices) shall be subject to administrative fines of up to 35 000 000 EUR or, if the offender is an undertaking, up to 7 % of its total worldwide annual turnover for the preceding financial year, whichever is higher.”
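To make “whichever is higher” concrete: the fixed €35 million cap is larger than 7% of turnover until worldwide annual turnover reaches €500 million (35,000,000 ÷ 0.07), after which the percentage cap takes over. The short Python sketch below works through that arithmetic. It only illustrates the sentence quoted above, assuming turnover is given in whole euros; the function name is our own, hypothetical invention, and it computes the maximum possible fine, not what any regulator would actually impose.

```python
def article_99_max_fine_eur(worldwide_annual_turnover_eur: int) -> float:
    """Hypothetical helper: upper limit on a fine for a prohibited (Article 5)
    AI practice by an undertaking, per the quoted Article 99 sentence only.

    Cap = EUR 35 million or 7% of total worldwide annual turnover for the
    preceding financial year, whichever is higher.
    """
    fixed_cap_eur = 35_000_000
    # Integer multiplication first, then a single division by 100.
    turnover_cap_eur = worldwide_annual_turnover_eur * 7 / 100
    return max(fixed_cap_eur, turnover_cap_eur)


# EUR 200 million turnover: 7% is EUR 14 million, so the fixed cap applies.
print(article_99_max_fine_eur(200_000_000))      # 35000000
# EUR 100 billion turnover: 7% is EUR 7 billion, so the percentage cap applies.
print(article_99_max_fine_eur(100_000_000_000))  # 7000000000.0
```

This restates one sentence of the law and nothing more; it ignores any other provisions of the Act that may affect how fines are set.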

Of course, as with anything in the EU, enforcement is subject to each member state’s national policies and procedures and may take time, but the punishment is there if the violation is grave enough. All governments are required to report any infringements to the European Commission as soon as they are perceived. In any case, as in big business generally, when the major players can afford to pay the fines imposed, they will continue to act as they please.

Controversies and Major Players

So to put all this into practical implications, we wanted to look at what’s been going down with some of our “favorite” major AI innovators wishing to introduce new AI-based technology in EU member states, and which member states are generally for or against the legislation. Of course, for the law to pass, everybody had to be on board, but the horse trading has been going on for years.

For starters, Politico.eu reported that France, Germany, and Italy were dragging their feet as the law’s development was coming to a close. They argued that clauses penalizing foundation models would hinder the EU’s own AI companies in today’s AI arms race. There was much controversy over where to draw the line on these major technologies, and ultimately, the regulation remains nebulous.

Brussels should get credit for being the first jurisdiction to pass regulation mitigating AI’s many risks, but there are several problems with the final agreement. According to Max von Thun, Europe Director of the Open Markets Institute, thanks to those major players, there are "significant loopholes for public authorities" and "relatively weak regulation of the largest foundation models that pose the greatest harm." Foundation models are large models, like the ones behind ChatGPT, that use massive amounts of data to “learn” how to create the user’s desired outputs.

Another criticism floating around is that the act is a smokescreen and will be ineffective against major technology monopolies. Von Thun continued, “The AI Act is incapable of addressing the number one threat AI currently poses: its role in increasing and entrenching the extreme power a few dominant tech firms already have in our personal lives, our economies, and our democracies.”

The threat comes not only from massive global players like Meta (which has already rolled back some EU initiatives) but also from companies inside the EU market, like France-based Mistral, which just partnered with Microsoft.

Ultimately, as Marianne Tordeux Bitker, public affairs chief at France Digitale, puts it, “While the AI Act responds to a major challenge in terms of transparency and ethics, it nonetheless creates substantial obligations for all companies using or developing artificial intelligence, despite a few adjustments planned for startups and SMEs, notably through regulatory sandboxes.”

What do you think about the EU AI Act? Reach out and let us know. We want to hear your thoughts and opinions. Is this as big of a deal as some politicians or EU parliamentarians are making it out to be? Or is it just a way to help major companies stay in the game while startups and small to medium-sized businesses have to cut through more red tape? We will find out more in the coming months, but I have a hunch AI may be bigger than even the EU at this point.

Case Study #1: Apple Intelligence

June 18, 2024

Apple, Inc. is a global mega-player in the technology industry. Founded by Steve Jobs and Steve Wozniak in 1976, the company began breaking barriers in product development and innovation with a focus on user-centric functionality. However, over time, that user-centric focus has seemingly gone by the wayside.

We’ve all had to deal with replacing chargers for new Apple products; it seems every device needs a new one! And then there are the system updates. It’s nearly impossible to keep up with their requirements. That is the point. If you use Apple products, these changes are required, and the hardware and software are designed to push you into them. Now, Apple has implemented proprietary artificial intelligence, known as “Apple Intelligence”. While it will only be available on newer versions of their products, the implications could be huge.

First, let’s take a look at the new agreement Apple, Inc. has made with OpenAI.

Details are still not public, but we are following this story closely. The legalities are nebulous and will certainly draw attention.

When Apple Inc. Chief Executive Officer Tim Cook and his top deputies this week unveiled a landmark arrangement with OpenAI to integrate ChatGPT into the iPhone, iPad, and Mac, they did not disclose financial terms.

Finding information about the deal has proven difficult, but this is what we’ve found so far.

Apple will install ChatGPT in its operating systems and Siri, impacting over 2 billion active devices worldwide. This extends the reach of OpenAI, which already has approximately 180 million active users, not to mention the millions who use Microsoft’s GPT-4-powered Copilot.

The partnership makes sense. iPhones and other Apple products are already a go-to source for maps, history, news, and trivia. You name it, and chances are you’ve taken out your phone to look it up.

Plus, in the technology world, Apple had until recently been mocked for falling behind other industry giants like Amazon, Meta, Google, and Microsoft on AI. It was time for them to prove their technological innovation.

This change will influence the entire industry.

We are in a new era. Phones and other endpoint devices are listening. Helping! Providing insights and settling arguments with data. The pain points are clear: we’re all pressed for time, and having information at our fingertips is enormously useful. Whether you need directions or a Wikipedia page, having the information available is very helpful and reliable.

We understand the need for this technology but we also see some risks. And it’s not just us. The following comes from an open letter from AI practitioners:

“We are current and former employees at frontier AI companies, and we believe in the potential of AI technology to deliver unprecedented benefits to humanity.

We also understand the serious risks posed by these technologies. These risks range from the further entrenchment of existing inequalities, to manipulation and misinformation, to the loss of control of autonomous AI systems potentially resulting in human extinction. AI companies themselves have acknowledged these risks.”

So what is Apple going for? Why are they okay with this? Where will they be gaining additional revenue? And what can we do about it?

The following excerpt from a recent Forbes article explains it well:

“On May 14, co-founder and former Chief Scientist, Ilya Sutskever, and former Head of Alignment, Jan Leike, announced that they were leaving OpenAI. Sutskever and Leike were co-leading OpenAI’s Superalignment team, which seeks to not only align AI behaviors and objectives with human values and objectives but also prevent super-intelligent AI from “going rogue.”

Super-alignment is becoming increasingly important as artificial general intelligence (AGI) becomes an ever-increasing possibility. Their concurrent departures may be signaling that OpenAI may not be serious enough about safety. For example, Chat GPT (Open AI’s property) can be used to train and fine-tune Open AI models. While enterprises can opt out of sharing their conversations to train new models, the organization still requires the organization's ID and the email address associated with the account owner. They still retain the data and do not explicitly share how they protect said data.

Leike posted on X that “safety culture and processes have taken a backseat to shiny products,” and added in another X post: “I have been disagreeing with OpenAI leadership about the company's core priorities for quite some time, until we finally reached a breaking point.”

At this moment, we are still seeking information. Elon Musk is already attacking the deal. He argued that integrating OpenAI’s software poses a security risk, suggesting that Apple cannot fully protect user data once it is shared with OpenAI.

Apple is working to stay ahead in the AI markets, competing with major companies like Microsoft, which they overtook on June 12, 2024, to become the world’s most valuable company.

But what does this mean for us?

From personal experience, AI can and does go rogue. Having it automatically installed on your endpoint devices means all of your data and personal information can be easily accessed, especially since Apple Intelligence can act across apps. As new technologies evolve, it is really difficult to stay ahead of traditional hackers, advertisers, and even AI itself.

Just today, I had to reset two Apple devices (2015 and 2022) and it took hours to ensure my data was saved and protected.

Companies are working to safeguard devices, but against whom, and when? With access to your endpoints, it is very difficult to control how AI uses your system. It takes an entire team working 24/7 to ensure that all network endpoints are secure.

Then, add the human element. If people in an organization are not following best security practices (which are constantly changing) they can be points of vulnerability as well.

This is NOT anti-Apple. The point of this article is to let you know what is going on and provide updated information as we follow this story. Be sure to read the terms of service before you sign anything or hand over your personal data!

If anything, this is an opportunity to stand together against major corporations and hold them accountable for end users’ data security. We understand that sometimes it’s easier to just sign on the dotted line and worry about the consequences when they come knocking, but we are working to:

- Empower consumers to take care of their extremely valuable data.

- Maintain individual rights in the face of massive profit-driven technology companies.

- Stay informed of major cases against said companies.

- Hold governments accountable to their constituents despite massive lobbying campaigns.

This is the beginning of a major change in how big technology companies operate, and we want you to be a part of it. Examples include a suit against Microsoft in 2022 (the first major change!), followed by more suits against Microsoft and OpenAI, including The New York Times’s complaint over the “unlawful use of the Times’s work to create artificial intelligence products that compete with it” and that “threatens the Times’s ability to provide that service.”

However, despite multiple lawsuits from national governments, companies like Meta, Google, Microsoft, Alphabet, Amazon, and Apple have been able to pay to get off scot-free. The strategy is simple: use monopolistic practices to win the market, monetize, then pay for the slap on the wrist from governments.

This is just the beginning, but we’re here to provide information from informed research. If you are interested in contacting us regarding a personal story of AI, please reach out!

Believe in my cause? PLEASE send me a donation!

Feel free to reach out if you would like to be featured and share why you care.

Currently, donations are the lifeblood of this movement. We are not government-funded, and we do not have deep pockets.

Zelle transfer: 01@AntiAI.com

As of this moment, donations are not tax-deductible.
